Kubernetes at Seed Stage

I know what you, dear reader, must be thinking after reading the title: “Kubernetes at an early stage startup? That is like bringing a gun to a knife fight,” or “Kubernetes is overpowered at such a small scale and does not make sense.” But give me a chance to present its benefits for early stage startups before you move on.

Before we begin, a quick introduction to Kubernetes, on the off-chance that you’re not familiar with it. Kubernetes, or k8s as it is fondly known to its users, is at its core a container orchestration framework that automates the deployment, management and scaling of services. It takes over the effort that would otherwise go into operations such as rolling updates and keeping services highly available.

The traditional stereotypes about k8s are that it is a massive framework requiring a dedicated team to run the cluster and keep it operational, and that it is time-consuming and painstakingly difficult to set up. Neither is really accurate anymore.

While k8s does need dedicated teams and engineers to maintain at scale, the same is not true when it orchestrates the small number of workloads at an early stage startup. At Dashtoon we are a team of 5 engineers, each working on our own product tasks, who are also responsible for the continued operation of the cluster. Every one of us is comfortable making changes to the cluster, be it deploying a new service or creating a new ingress.

As for setting up the cluster, it has become ridiculously easy to get a production-scale cluster up and running with the managed solutions offered by cloud providers such as Azure and AWS. In fact, we were able to bring up our cluster and serve production traffic within an hour using one of the managed solutions.

Now that I’ve covered the existing stereotypes and how relevant they still are, let’s move on to how we use the framework at Dashtoon and how it makes our lives easier compared to our previous setup.

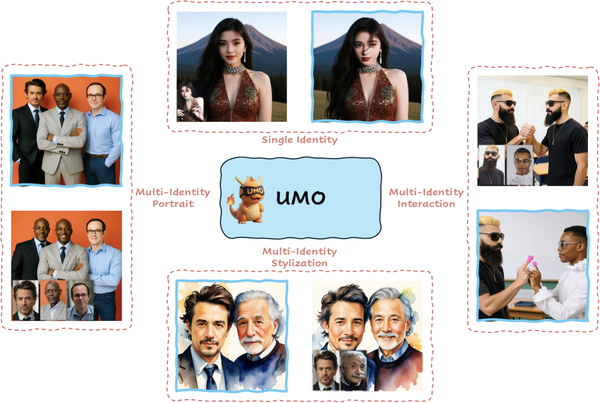

First, a bit of an introduction to Dashtoon. We are a generative AI startup aiming to make it easier for creators to tell visual stories and to delight users with visual content. The idea is to reduce the time and effort it takes to create visual stories so that authors can focus on the storytelling.

Given the small team and scale, we made a conscious decision to go with a mono-repo setup for both the backend and the frontend. Our languages of choice are Kotlin for the backend and Dart (via Flutter) for the frontend. Along with this, we also self-host stable-diffusion-webui to allow quick prototyping of model changes and new technologies. On top of all this, we have separate dev and prod setups to handle, along with the respective ingresses for each service.

We started out running Docker on a VM, with each service in its own container. At that time, we had the same bias: that k8s is an overpowered solution which would not be needed at such a small scale and would be more hassle than it is worth (how wrong we were!).

So, here is what the setup looked like:

- A VM hosted on Azure

- Running Docker containers

- nginx container to front the incoming requests, with individual configs for each sub-domain

- watchtower to update the service on each image push

- A secondary VM running Docker with basic Prometheus and Grafana for monitoring

While this was perfectly serviceable, we had several gripes with the setup:

- Scaling up a service meant bringing up another VM with the same set of services

- There were multiple nginx config files with a lot of boilerplate. To give you an idea, we had 3 active services and 2 sub-domains for each, which meant 6 config files just to start with.

- Separating out dev and prod environments was difficult

- Rolling updates were almost impossible. Doing them while staying within the Docker setup would have meant moving to Docker Swarm, just to gain the ability to control replicas for each service. To add to the difficulty, watchtower works at the machine level and hence would not be aware of the other machines in the swarm, so we would also have had to find a swarm-aware alternative.

- RBAC for access to logs and configuration was difficult

- Managing secrets and environment variables required editing the base docker compose file
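To give a feel for the nginx boilerplate gripe, here is a minimal Python sketch that renders one server block per service and sub-domain. The service names, ports, sub-domain labels and `example.com` domain are all hypothetical, and the template is a generic reverse-proxy block, not our actual configuration.

```python
from itertools import product

# Generic reverse-proxy server block; only three values change per file.
SERVER_BLOCK = """\
server {{
    listen 80;
    server_name {subdomain}.{domain};

    location / {{
        proxy_pass http://{service}:{port};
    }}
}}
"""


def render_configs(services: dict[str, int], labels: list[str], domain: str) -> dict[str, str]:
    """Return a mapping of config file name to rendered nginx server block."""
    configs = {}
    for (service, port), label in product(services.items(), labels):
        subdomain = f"{service}-{label}"
        configs[f"{subdomain}.conf"] = SERVER_BLOCK.format(
            subdomain=subdomain, domain=domain, service=service, port=port
        )
    return configs


if __name__ == "__main__":
    # Hypothetical services and ports, chosen only to match the 3 x 2 shape above.
    services = {"backend": 8080, "web": 3000, "sd-webui": 7860}
    configs = render_configs(services, ["dev", "prod"], "example.com")
    print(f"{len(configs)} config files to maintain by hand")
```

Every new service or sub-domain adds another near-identical file, which is where most of the maintenance effort went.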

Taking all of this into account, we decided to give k8s a try, given our prior experience with it while handling infrastructure at udaan and knowing that it provides a solution for each of the points above. Let me list the changes to the setup and the ease that came with k8s.

With k8s, the setup becomes:

- A k8s cluster with two node pools (read: VMs), one for the system (control plane, if you will) components and another for workloads. You may have noticed that the nodes do not appear anywhere in the diagram above; that is because k8s abstracts away the nodes and takes care of scheduling and scaling for us.

- Three main namespaces:

  - dev

  - prod

  - monitoring

- Both the dev and prod namespaces have the back-end and the web front-end

- Monitoring now includes OpenTelemetry, which pushes to Prometheus; the metrics are then visualised in Grafana

- Rolling updates, with at most 25% of a deployment's pods unavailable at a time

- RBAC, integrated with Azure AD. This allows us to manage access at the AAD group level

- Secrets and config maps mounted as environment variables on the deployments

- Ingress is handled by k8s using ingress-nginx. This allows us to just mention the sub-domain, certificates and the host path

- CI/CD pipeline with the ability to target dev and production deployments
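To make the rolling update item concrete, here is a small Python sketch of what a 25% unavailability budget means in pod counts. It assumes the percentage is applied the way Kubernetes Deployments apply `maxUnavailable` (rounded down) and `maxSurge` (rounded up). The 25% surge value is the Kubernetes default and an assumption on my part, since only the unavailability figure is listed above.

```python
def rolling_update_budget(replicas: int, max_unavailable_pct: int = 25,
                          max_surge_pct: int = 25) -> tuple[int, int]:
    """Return (max_unavailable, max_surge) as absolute pod counts."""
    if replicas < 0:
        raise ValueError("replicas must be non-negative")
    # A percentage maxUnavailable is rounded down to whole pods.
    max_unavailable = replicas * max_unavailable_pct // 100
    # A percentage maxSurge is rounded up to whole pods (ceiling division).
    max_surge = -(-replicas * max_surge_pct // 100)
    return max_unavailable, max_surge


if __name__ == "__main__":
    for replicas in (2, 4, 8):
        unavailable, surge = rolling_update_budget(replicas)
        print(f"{replicas} replicas: up to {unavailable} down, up to {surge} extra")
```

With 4 replicas this allows at most one pod to be down at any point during a rollout. With only 2 replicas the unavailable budget rounds down to zero, so the rollout proceeds by surging an extra pod first.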

Looking at this list should give you an idea of how easy k8s makes it to manage infrastructure and the various components involved. Migrating to k8s freed up the time that was earlier spent writing nginx configurations and fiddling with Docker Compose YAML files to deploy a new service. To put that into perspective, we spent about 3-4 man-hours setting up the Docker workflow and making sure it worked, compared to the 1 man-hour it took to set up k8s. And that is before counting the upkeep and maintenance work that k8s now automates for us.
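For contrast with hand-written nginx files, the sketch below builds an Ingress manifest in Python and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The field names follow the `networking.k8s.io/v1` Ingress API; the resource name, host, service, port, TLS secret and the `nginx` ingress class name are hypothetical placeholders rather than our real values.

```python
import json


def ingress_manifest(name: str, namespace: str, host: str, service: str,
                     port: int, tls_secret: str, path: str = "/") -> dict:
    """Build a networking.k8s.io/v1 Ingress for a single host and path."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Assumed class name; it depends on how ingress-nginx was installed.
            "ingressClassName": "nginx",
            # The certificate lives in a Secret referenced by name.
            "tls": [{"hosts": [host], "secretName": tls_secret}],
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": path,
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service, "port": {"number": port}}},
                }]},
            }],
        },
    }


if __name__ == "__main__":
    manifest = ingress_manifest(
        name="backend", namespace="dev", host="api.dev.example.com",
        service="backend", port=8080, tls_secret="backend-dev-tls",
    )
    print(json.dumps(manifest, indent=2))
```

The only things that vary per service are the sub-domain, the certificate secret and the path, which is the point made in the list above.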

By this point you must be realising the benefits that k8s brings, but you may also be wondering whether all of this magic comes at a cost. To assuage your fears: no, it does not increase costs by much. Depending on the provider and pricing tier, there is often little or no additional charge for setting up and running the cluster itself; the costs come almost entirely from the VMs used for the node pools. Then why any increase at all? Because, following good practice for a k8s cluster, we provision an extra VM to host the system (control plane, if you will) components. Compared to the previous Docker setup, our k8s cluster therefore comes in at roughly 5-10% additional cost.
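The overhead arithmetic is simple enough to sketch in Python. The monthly figures below are made-up placeholders, not our actual bill; they only illustrate how one small extra system VM translates into a single-digit percentage on top of the existing VM spend.

```python
def extra_cost_percent(baseline_monthly: float, system_node_monthly: float) -> float:
    """Percentage increase caused by adding one system node to the baseline spend."""
    if baseline_monthly <= 0:
        raise ValueError("baseline_monthly must be positive")
    return 100.0 * system_node_monthly / baseline_monthly


if __name__ == "__main__":
    # Hypothetical numbers: existing VM spend vs. one small system-pool VM.
    baseline = 1200.0
    system_node = 90.0
    print(f"Additional cost: {extra_cost_percent(baseline, system_node):.1f}%")
```

The smaller the system VM is relative to the workload VMs, the lower this percentage gets.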

So to summarise,